CHAPTER THREE

3.1 Types of Research Designs

Introduction

We have already indicated that certain conditions must be met before one can correctly apply parametric or non-parametric statistical tools in the treatment of data. For instance, the design used in a study guides the type of statistics to be used. In this chapter, we shall discuss the different types of research design and, for each, the statistical tools appropriate for treating the data obtained under that design.

Research design can be defined as the proposed or adopted systematic and scientific plan, blueprint or road map of an investigation, detailing the structure and strategy that will guide the activities of the investigation, conceived and executed in such a way as to obtain relevant and appropriate data for answering pertinent research questions and testing hypotheses. The five major components or issues which the research design deals with are: identifying the research subjects; indicating whether there will be grouping of subjects; what the research purposes and conditions will be; the method of data analysis; and the interpretation techniques for answering research questions and/or testing hypotheses. These are some of the basic purposes of research design, which the researcher should take cognizance of, or think through, in determining the appropriate design to use. One of the basic considerations that will inform the choice of a particular design is the purpose of the study. For example, if a study is intended to establish causation, or a cause-effect relationship between an independent and a dependent variable, the appropriate design is experimental. If a study is designed to find and describe, explain or report events in their natural settings, as they are, based on sample data, it is a survey. On the other hand, if a study is intended to identify the level to which one variable predicts a second, related variable, such a design is correlational. Studies that seek to provide data for making value judgments about some events, objects, methods, materials, etc. are evaluation design studies. Broadly speaking, all educational and social science research studies can be classified into the following two broad designs, which the researcher normally would adopt in conducting his study: descriptive and experimental design. Within descriptive design are surveys, case studies, etc.
Within experimental design are true and quasi experimental designs which can be broken down further, as we shall see later, when we discuss experimental design studies.

So far, we have noted that the design of a study is a blueprint or plan of work for the research, and that it generally involves the researcher carefully and systematically giving thought to each of the five basic components of the typical research design indicated above. As we noted earlier, to make a choice of a particular design, the researcher must consider what his study is all about with regard to: what he wants to accomplish as part of his study; how many subjects would be involved, whether they would be grouped and what each group or sample would do; what specific and general activities would constitute the research conditions, and whether he would be able to ensure subjects’ compliance with these conditions; what the data of the study would be and what tools can be most appropriately and effectively used in analyzing such data; and the kind of accurate interpretation that can be made from the data analyzed. After such consideration, he must then reach a decision on each of them in terms of whether what is called for in the design to be used is feasible, logical and sensible. This latter issue unfolds when the research is in progress; if things do not go as well as was planned in the design, each of these components can be revisited and modified based on the reality on the ground. Such modifications should be made after due consultation and agreement with your supervisor, and only after the researcher is fully satisfied and convinced that they are in the interest of the aims and objectives of the study. In Chapter 3 of your thesis, titled Research Methodology or Methods, under the section on design, ensure that you indicate the design of your study by name, describe it and justify its appropriateness for use in the study, include information on how it was used in the study, and so on.
You may even need to cite studies similar to yours where the design you selected was successfully used and reported, assuming you used a design that is complex and not familiar to many others.

Types of Research Design

With regard to the normal research process, one can identify two broad types of research designs: experimental (parametric) and descriptive (non-parametric) designs. All studies in education and social science are either descriptive or experimental or, in a number of rare cases, a combination of both; one aspect of a study can involve mere description of observed events while a later part of the same study involves testing hypotheses under treatment and control research conditions. But in its strictest sense, as noted earlier, all research studies can be classified as falling into descriptive design or experimental design. Within each of these two broad categories are sub-categories of research designs.

Descriptive design studies are mainly concerned with describing events as they are, without any manipulation of what caused the event or of what is being observed. Any study which seeks merely to find out what is, and to describe it, is descriptive. Case studies, surveys, historical research, Gallup polls, instrumentation studies, causal-comparative studies, market research, correlational research, evaluation research, as well as tracer studies can all be categorized as descriptive. For instance, a study in which a researcher develops and validates a test instrument, based on a certain curriculum, as its major focus is an instrumentation or developmental design. A study in which the researcher is interested in finding out the attitude of school administrators, teachers or union leaders toward free secondary school education is a survey. For each of the two examples cited above, and other descriptive studies like them, researchers are mainly concerned with investigating, documenting and describing events. When a new procedure, method, tool, etc. is developed and tried out as the major focus of a study, it is a descriptive study, referred to as an instrumentation or developmental design study. Note that the new procedure, method or test is used to obtain certain relevant information, existing or absent (for example, achievements), without the developed procedure, method or test itself causing any observed changes in students’ level of achievement. Similarly, a study in which an instrument is developed and administered to school administrators on their attitudes toward a proposed free tuition fee for secondary education is a survey, because the instrument does not cause or influence their attitude; it is used merely to elicit information on this subject matter, which is then described. Thus, the thrust of the study here is not on instrument development (it is not an instrumentation design study) but on using a developed instrument for surveying a particular phenomenon, event, etc., which is then explained, described, documented, etc.

From the foregoing, it ought to be apparent to you that most descriptive studies rely on observation techniques for gathering information, which is then summarized (analyzed and described). Another type of descriptive design which is gaining research prominence is the case study. In this design, emphasis is given to a limited scope of coverage rather than a wider spread; depth is emphasized. A study in which the incidence of sexual harassment at the University of Jiblik is investigated is a case study. What are the major strengths and weaknesses of a case study? A study which investigates the history and development of a named phenomenon over a period of time is historical (for example, The Child Soldier activities in the Post-Colonial Bush Wars in Sudan). If a historical study is long drawn out, say for about 6–12 years, it becomes a longitudinal study. Market research design is a study of how market forces influence the cost of goods and services, productivity, buying preferences, mobility of capital, acquisitions and mergers, etc. Evaluation studies document the status of events and pass value judgments on those events. Causal-comparative studies describe how an event that is not manipulated has a probable impact on another event, e.g., a study on the impact which students’ head size has on achievement in mathematics. The major weakness of causal-comparative studies, also called Ex Post Facto studies, is that they may lead to wrong conclusions, commonly referred to as the Post Hoc Fallacy. If in the present example it was found that students with large heads achieved better in mathematics, what does this really mean? Rubbish. Why?

While descriptive studies have been known to be very useful as a basis for collecting and documenting information for institutional policy formulation, systems-wide improvement and management decision support, they have recently been criticized for a number of reasons. Most of the reasons are traceable not so much to descriptive studies themselves as to the researchers. For instance, most researchers are not thoughtful and systematic in developing and using reliable and valid data-gathering instruments for collecting observational or survey data. Even when this condition is satisfied, there is also the problem of the inherent distortion of information that results from researchers’ over-reliance on questionnaire, interview and case study data which, to begin with, are more likely to be unstable than stable. For instance, a description of the smoking habits of teenage Nigerians, using volunteer samples at street corners, entertainment clubs, churches, mosques, etc., and based on their responses to a questionnaire, should be taken with a grain of salt rather than being seen as sacrosanct; attitudes to events change, and earlier attitudes, once described, become distortions of what they are now. This explains why questionnaire data should not be considered overly rigorous or reliable. We shall discuss the specific kinds of descriptive designs in more detail later in this chapter.

Parametric or experimental research designs are those studies which are mainly concerned with identifying cause-effect relationships between the independent and dependent variables of a study. This type of design enables the researcher to test hypotheses upon which valid, reliable, duplicable and verifiable conclusions are premised. An experiment is a planned and systematic manipulation of certain events, procedures or objects, based on the scientific model, such that every event, procedure or object is given a fair and equal chance to prove itself. Such proof is determined through the careful documentation of observed changes or outcomes, if any. Thus, in an experiment, every element is kept constant except the one whose effects the researcher is interested in. Through experimental design, a rigorous and scientific approach to investigating a problem is made possible. This design calls for establishing research conditions under which an experiment can take place before such a design is said to be experimental. For instance, the design may demand that subjects for the study are randomly drawn and grouped, and/or that the research conditions of treatment and control are randomly assigned to subjects. Experimental design also requires that whatever variables are to be manipulated are quantifiably and clearly defined and distinct, and that the manipulation is rigorously complied with to avoid contamination. Also, whatever extraneous variables can intervene between the independent and dependent variables must be identified early enough, and such extraneous variables removed or severely minimized. How and what observations (testing, data collection, etc.) are to be made, when, why and by whom, must be indicated. The type of statistical analysis to be used in testing the hypotheses and reaching conclusions must be relevant and appropriate to the design, type of data and so on. These and other demands, which we will discuss later, clearly make experimental studies rigorous.

A central need for experiments in education and social science is ensuring that proper experimental controls have been established and complied with. There are usually three levels of control in any experiment. The first level of control is that of ensuring that all the subjects, prior to the commencement of an experimental study, are homogeneous, equal or the same on the characteristic which will ultimately become the dependent variable. If the subjects differ on the dependent variable, say achievement in mathematics, clearly they are not homogeneous or equivalent, even before the experiment starts. Consequently, any difference in the posttest (post-treatment test, or test given at the end of an experiment) across groups of subjects which were not homogeneous in ability may be due to chance, or to those initial differences, rather than to the treatment versus control research conditions. To avoid this problem, subjects or samples should be randomly drawn from a common population rather than being selected. When subjects are selected, this leads to the composition of arbitrary, non-probability samples. Selection bias is a major threat to an experiment. Indeed, if research samples are selected, one can no longer consider the design for such a study a true experiment; rather, the design becomes a quasi-experiment. One other way of ensuring a homogeneous sample is through the pre-testing of subjects to obtain baseline data prior to the commencement of the experiment. Based on the baseline data, subjects are equally distributed to treatment or control conditions. However, when sampled research subjects are pre-tested, the design is no longer a true experiment but a quasi-experimental design. Quasi-experimental design is less robust and is used when subjects are pre-tested and the randomization of subjects in a study is not feasible.
It is a school-friendly type of design in that it can be used in schools without any disruption to the school’s class structure or timetable of academic events. This can be achieved by assigning treatment or control research conditions to selected intact classes, etc.
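The two routes described above, randomly drawing and grouping individual subjects versus randomly assigning conditions to intact classes, can be sketched in a few lines of Python. This is only an illustrative sketch: the function names, the subject numbers, the class labels (JSS3A, JSS3B) and the seed are all hypothetical and are not taken from any study.

```python
import random

def randomly_assign(subject_ids, conditions=("treatment", "control"), seed=None):
    """Shuffle the subjects, then deal them out to the conditions in turn."""
    rng = random.Random(seed)  # a fixed seed makes the assignment reproducible
    shuffled = list(subject_ids)
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for position, subject in enumerate(shuffled):
        groups[conditions[position % len(conditions)]].append(subject)
    return groups

def assign_intact_classes(class_names, conditions=("treatment", "control"), seed=None):
    """Randomly give each intact class a condition (subjects are NOT reshuffled)."""
    rng = random.Random(seed)
    shuffled = list(class_names)
    rng.shuffle(shuffled)
    return {name: conditions[position % len(conditions)]
            for position, name in enumerate(shuffled)}

# Hypothetical example: 40 subjects, and two intact class streams.
groups = randomly_assign(range(1, 41), seed=2024)
class_conditions = assign_intact_classes(["JSS3A", "JSS3B"], seed=2024)
print(len(groups["treatment"]), len(groups["control"]))
print(class_conditions)
```

In the first function every subject has an equal chance of landing in either group; in the second, only the condition is randomized, which is the school-friendly arrangement discussed above.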

The second level of control in an experimental design study is the identification of the attributes of the independent and dependent variables, as well as subjects’ compliance with the manipulation and the systematic observation of any changes arising from the treatment condition. Note that in experiments the control condition is not manipulated but merely observed. The data obtained from these observations are given appropriate parametric treatment and used for testing the formulated hypotheses.

The third level of experimental control involves the assurance that extraneous variables, such as those enhancing or mitigating events or threats to the study, are removed or minimized. There are generally two broad categories of such threats: internal and external validity threats. These threats will be discussed extensively on their own merit later in this chapter. Meanwhile, despite these threats, you need to consider and decide the specific type of experimental research design you will select and use for your experimental study. You will most probably know this for a fact after you have read the remaining part of this section. Because there are many forms of experimental design, we will need to discuss some of the more important ones in terms of what each one of them involves. However, an extensive and complete discussion of all the currently existing 36 different forms of experimental design studies is beyond the scope of this book. The avid reader, on this aspect, may wish to consult Cochran and Cox (1983) and/or Campbell and Stanley. Indeed, Campbell and Stanley described sixteen specific forms of experimental design. We will discuss only four of the most common ones. In discussing these forms of experimental design, the following symbols will be used:

R: represents the random sampling of subjects, or the random assignment of the treatment research condition to an experimental group and of control to another group. Remember that when you select your samples, the design of the study is no longer a true experiment. This is why all true experimental samples should be randomly composed.

X: represents the treatment or experimental variable (independent variable) manipulated as part of the research condition for purposes of observing its effect on the dependent variable, if any. Treatment must be carefully and quantifiably described, since its impact, effect, etc. is the major thrust of the experiment. A general broad description of treatment is unacceptable. It must be presented in such a way that another person somewhere else and in another era can duplicate your defined treatment in an identical, proposed experimental research. At the end of an experiment, the analysed treatment data should be reported in line with the research questions and hypotheses both holistically and singly, on the issues raised in the study.

C: represents the control variable, or no-treatment condition (placebo). Here, nothing is manipulated. This aspect of the independent variable is left to operate naturally, without manipulation, so as to observe its effect or lack of effect on the dependent variable. Note that the control is the contrast to the treatment. No aspect of the control should be in the treatment.

O: represents the observation or test administered to subjects, which is a measure of subjects’ performance on the dependent variable. Any tools used for observation must be in line with the problem of the study, purpose of study, research questions and hypotheses. Such observational tools must also be valid, reliable and useable. O1 and O2 mean pretest and posttest respectively.

A horizontal line (-----): drawn between levels, it is used to indicate equated groups or equivalent groups.

S: represents the subject in an experimental study; the plural is Ss.

E: refers to the experimental group subjects (i.e., the treatment subjects or those who receive X).

3.2 True Experiment

In designs of true experiment, the equivalence of the treatment (experimental) and control group subjects is attained by the random sampling and assignment of subjects to treatment and control conditions respectively. Where this is difficult to do, as is usually the case in normal school settings, two equivalent groups, say pupils of two streams of junior secondary three (by their being students in the same class, they may be technically considered to be academically equivalent or homogeneous), may be respectively randomly assigned to treatment or control conditions without the students themselves being randomly assigned to groups. The true experimental design calls for no pre-testing of subjects. We will now discuss two forms of true experimental design.

The post-test only equivalent groups design is a very powerful and effective design in the sense that it minimizes, if not completely removes, internal and external validity threats to an experiment. Experimental and control groups are equated on any of the research-related, pre-determined variables through random sampling and grouping. Note that when samples are randomly drawn and grouped, they have a very high probability of being homogeneous and representative of the populations they were drawn from.

Selection of samples in experiments introduces selection bias, and this is a very serious threat to the experiment and to the findings of any study. In the above design, there is no pretest, and the randomization process is part of the control to ensure that selection bias, pretesting effects and contamination by all possible extraneous variables are removed. This in turn assures that any initial difference between the groups, before the commencement of the research treatment conditions, is very small and of no serious consequence to the observed outcome at the end of the experiment. In this design, after subjects are assigned to groups (there can be as many groups as the researcher wants or as is required by the study, but they must be made equivalent through randomization), the researcher has to decide which group will receive treatment and which group will receive control. Only the subjects in the treatment group will be exposed to the experimental treatment. The control group receives no treatment (or attributes of treatment), but in all other respects it is treated like the experimental treatment group. For instance, if the planned experimental treatment is teaching with the laboratory method while the control is teaching with the lecture method, these conditions will be very clearly defined in terms of their characteristics and how teachers will comply with them; more importantly, these characteristics must prevail respectively in the two unique groups. The researcher must see to it that there is no mixing of any aspect of the treatment condition with any aspect of the control condition. When this mixing occurs, it results in a research condition referred to as subjects’ contamination. This is a very serious methodological shortcoming in research in education and social science, or indeed in any research study. This notwithstanding, all other conditions of the experiment will be the same for both groups.
The amount of time allotted for actual teaching, the teachers’ qualifications and personality, the topics taught, etc. will have to be the same for the experimental treatment group as for the control group. At the end of the experiment, both groups are given the same posttest, which is a measure of their reaction or response on the dependent variable (achievement on a test, etc.). The mean post-test score of the experimental treatment group subjects is statistically compared with the mean post-test score of the control group subjects using an appropriate parametric statistic or tool. The underlying assumption is that if the mean of the experimental treatment group is the same as, or very close to, that of the control group, then treatment is of no significance. Conversely, if the mean scores of the experimental treatment group and the control group are statistically significantly different (that is, the difference is too large to be explained as having arisen from chance factors), one can then assert that the experimental treatment conditions were responsible for the observed result; treatment caused the observed differences between the experimental treatment and control group subjects. This design is strongly recommended for use in experimental research in education and social sciences because of its many in-built advantages, one of which is the establishment of two homogeneous or equivalent research groups, as has already been highlighted. Also, this design ensures adequate controls for the main treatment effects to operate; thus the effect of history is minimized or removed since there is no pre-testing, and there is little or no maturation since this is not a long drawn out design. For instance, because there is no pretest, there is no interaction effect between pre-test and post-test and no interaction between pretest and the independent variable (teaching methods).
This design is useful because of its rigour and its flexibility for studies where pre-testing is undesirable and would introduce an internal validity threat. The design is also used in studies where pre-testing is unnecessary, such as studies involving entry-level new intakes to a programme who may have no previously known level of knowledge, or no knowledge at all, to be pretested for. Note that this design can be extended to include more than two groups if necessary. A major disadvantage of this design is that, while it establishes the differences in performance, achievement, etc. at the end of the experiment, it does not allow the researcher the opportunity to observe any change from when the study started, but only the position when it ended. The reason is that there was no pretest, which would have allowed for pre-experimental observation of any differences that pre-existed within the same group of subjects or across different groups of subjects. Some researchers have also observed that, without a pretest’s baseline data, it would be difficult to correctly assume that all the subjects in the study were homogeneous prior to the commencement of the study. They further correctly argue that randomization can sometimes, even if rarely, yield non-homogeneous samples.
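To illustrate the statistical comparison of the two post-test means described above, here is a minimal sketch of the independent-samples t statistic with pooled variance, which is one common parametric tool for two groups (the text does not prescribe a particular one). The scores are invented for illustration, and the critical value 2.228 is the standard two-tailed t-table value for alpha = 0.05 with 10 degrees of freedom.

```python
from math import sqrt
from statistics import mean, variance

def pooled_t(treatment_scores, control_scores):
    """Return (t, degrees of freedom) for two independent groups, pooled variance."""
    n1, n2 = len(treatment_scores), len(control_scores)
    m1, m2 = mean(treatment_scores), mean(control_scores)
    # Pooled estimate of the common variance of the two groups.
    pooled_var = ((n1 - 1) * variance(treatment_scores)
                  + (n2 - 1) * variance(control_scores)) / (n1 + n2 - 2)
    standard_error = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / standard_error, n1 + n2 - 2

# Hypothetical post-test scores (no pretest is given in this design).
treatment_posttest = [68, 72, 75, 70, 74, 71]
control_posttest = [60, 65, 63, 62, 66, 64]

t_value, df = pooled_t(treatment_posttest, control_posttest)
critical_t = 2.228  # two-tailed, alpha = 0.05, df = 10, from a standard t table
significant = abs(t_value) > critical_t
print(round(t_value, 3), df, significant)
```

If the computed t exceeds the tabled critical value, the difference between the group means is too large to attribute to chance at the chosen level of significance; otherwise treatment is taken to be of no significance. With more than two groups, analysis of variance would take the place of the t test.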

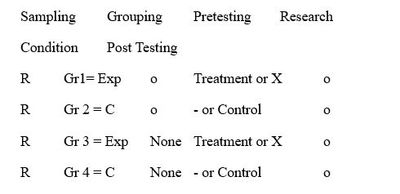

The second form of a true experiment which we will discuss is the Solomon Four Group Experimental Design. This design was established by Solomon (1964) in response to the need for finding an all-embracing and rigorous design which satisfied many of the demands by researchers seeking ways and means of removing internal and external validity threats to their studies. The design is represented below.

Conducting Research in Education and the Social Sciences

Solomon Four Group Experimental Design

The major and essential feature of the Solomon Four-Group Experimental Design is that it employs an alternate to one aspect of each line of activities in the design or plan. For instance, the Group 4 arrangement with regard to pre-testing is an alternate to Group 2; the Group 3 arrangement is an alternate to Group 2 as far as the research conditions of treatment and control are concerned. Another feature of this design is that it overcomes the interaction effect of pre-testing usually present in pretest-post-test design studies. Notice that subjects in the experimental Group 3 are not pre-tested but receive treatment, while subjects in Group 2 are pre-tested but do not receive treatment. The mean score differences between the pretest and post-test (the dependent variable) are used to determine the interaction between pre-testing and post-testing, or the so-called transfer effect of pre-testing in the study. Also, notice that because a pretest was administered in this design (to Groups 1 and 2), data from the pretest can be compared with data from the post-test, as gain scores, thus enabling the researcher to observe and determine the direction of change in the subjects. You may recall, as we pointed out earlier, that the post-test-only equivalent groups experimental design lacks this advantage since it does not include pre-testing. In the Solomon Four-Group Experimental Design, the post-test means are used for analysis of variance calculation to determine how significantly different the subjects’ mean post-test scores are: a statistically significantly higher mean post-test score for treatment than control indicates that there is no basis for asserting that the inter-group difference was due to chance. The basis of your argument may well be that the reactive effect of pre-testing did not in any way distort or mitigate the post-test data.
So, by considering the post-test data from experimental group 3, which did not receive any pre-testing, any contrary argument then does not have a locus standi, especially if the mean post-test value of experimental group 3 is significantly higher than that of control group 2. We can correctly assert that the experimental treatment caused the observed outcome (post-test), rather than the transfer effect of pre-testing or the interaction between pretest and treatment being the cause of significantly higher achievement. Thus, experimental group 3, which has no pretest, acts as a balance or alternate to experimental treatment group 1, which had treatment and pre-test. By adding control group 4, the design gains control over any possible contemporaneous effects that may occur between pretest and post-test. Seen at full glance, this design really involves conducting one experiment twice: once with pre-testing for two groups and once without pre-testing for two other contrasted groups. The two pre-tested groups are contrasted between themselves as far as treatment and control conditions are concerned, and the two groups that were only post-tested are likewise contrasted between themselves as far as treatment is concerned. Then, on their own, experimental group 1 fully contrasts with experimental group 3 while control group 4 fully contrasts with control group 2. The advantages of this design, in addition to that noted above, have been pointed out by Ali (1986, 1988, and 1989); this design reduces internal and external validity threats to experimental research to the barest minimum. But, by and large, the researcher must clearly and quantifiably define what his independent variables are (experimental treatment and control) and how they will be manipulated and complied with during the study. For example, two levels of an independent variable may be guided discovery with the use of a particular textbook A (treatment) and lecture with textbook B (control).
The dependent variables may be students’ achievements, cognitive styles, and cognitive development in physics; this gives a 2 x 3 factorial or Solomon Four Group Experimental Design study.
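The layout of the four groups and the contrasts among their post-test means can be sketched as follows. The layout is a reconstruction from the description in the text (Groups 1 and 2 pre-tested; Groups 1 and 3 treated), with R assumed to stand for randomization; the scores and function names are hypothetical, and the contrasts shown are simple differences of means, not the full analysis of variance.

```python
from statistics import mean

# Reconstructed from the text: which groups are pre-tested and which are treated.
SOLOMON_LAYOUT = {
    1: {"pretest": True, "condition": "treatment"},
    2: {"pretest": True, "condition": "control"},
    3: {"pretest": False, "condition": "treatment"},
    4: {"pretest": False, "condition": "control"},
}

def notation(group):
    """Write one row of the design using the symbols R, O1, X, C and O2."""
    spec = SOLOMON_LAYOUT[group]
    pre = "O1" if spec["pretest"] else "  "
    cond = "X" if spec["condition"] == "treatment" else "C"
    return f"Group {group}: R  {pre}  {cond}  O2"

def posttest_contrasts(posttests):
    """Compare the four post-test means (keys 1 to 4 are the group numbers)."""
    m = {group: mean(scores) for group, scores in posttests.items()}
    effect_pretested = m[1] - m[2]    # treatment vs control, with pretest
    effect_unpretested = m[3] - m[4]  # treatment vs control, without pretest
    return {
        "treatment_effect_pretested": effect_pretested,
        "treatment_effect_unpretested": effect_unpretested,
        "pretest_effect": (m[1] + m[2]) / 2 - (m[3] + m[4]) / 2,
        "pretest_by_treatment_interaction": effect_pretested - effect_unpretested,
    }

# Hypothetical post-test scores for the four groups.
posttests = {1: [70, 72, 74], 2: [60, 62, 64], 3: [69, 71, 73], 4: [59, 61, 63]}
for group in SOLOMON_LAYOUT:
    print(notation(group))
contrasts = posttest_contrasts(posttests)
print(contrasts)
```

When the treatment effect is about the same with and without pre-testing (an interaction contrast near zero), the argument that pre-testing produced the outcome loses its footing; whether any of these differences is statistically significant still has to be settled by the analysis of variance.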

There are two main disadvantages arising from using the Solomon Four Group Experimental Design for an experimental study. The first disadvantage is that it is much more difficult to carry out the demands of this design in schools or in many practical situations. Clearly, the Solomon Four-Group Experimental Design imposes more costs in terms of time, money, effort and services than any other design because it is actually two experiments in one design. The second problem is with regard to the enormity of statistical analysis required by this design. There are four groups of subjects but six sets of data collected: for the four groups there are four complete sets of post-test data, and for two of the groups there are two sets of pre-test data. If all the groups had a pretest, there would have been eight sets of data, but as you well know, this is not the case. Consequently, the complete set of data, the post-test, is analyzed with analysis of variance statistics, while the pretest to post-test data for two groups is analyzed with analysis of covariance for the pre-test interaction effect on the post-test. Doing these two tests separately is time consuming. So, statisticians have devised one test that can do both analyses simultaneously. The test that combines these two features, analysis of post-test data and analysis of pre-test data (i.e., analysis of pretest-posttest covariates), is called the Analysis of Covariance, ANCOVA, when only one dependent and one independent variable are involved.
The application of this test, ANCOVA, and of other parametric tests is long, demanding and rigorous, but some examples have been done for you in chapter 8. Because of the severe demands imposed on the researcher who wants to use the Solomon Four-Group Experimental Design, demands which an entry-level researcher may not be able to handle, it is advisable for him not to contemplate using this research design until he is adept and advanced in the techniques of experimental research; something that occurs much later in one’s experimental research experience.
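As a rough illustration of what the covariance adjustment does, the sketch below computes only the covariate-adjusted post-test means for the pre-tested groups, using the pooled within-group regression slope of post-test on pretest. It is not a full ANCOVA (no F test is carried out), and the function name and scores are hypothetical.

```python
from statistics import mean

def ancova_adjusted_means(groups):
    """groups maps a group name to a list of (pretest, posttest) score pairs.

    Returns the pooled within-group slope and the post-test mean of each
    group adjusted to the grand pretest mean.
    """
    all_pretests = [pre for pairs in groups.values() for pre, _ in pairs]
    grand_pre_mean = mean(all_pretests)

    sxy_within = 0.0  # pooled within-group sum of cross-products
    sxx_within = 0.0  # pooled within-group sum of squares of the pretest
    group_means = {}
    for name, pairs in groups.items():
        pre_mean = mean([pre for pre, _ in pairs])
        post_mean = mean([post for _, post in pairs])
        group_means[name] = (pre_mean, post_mean)
        sxy_within += sum((pre - pre_mean) * (post - post_mean) for pre, post in pairs)
        sxx_within += sum((pre - pre_mean) ** 2 for pre, _ in pairs)

    slope = sxy_within / sxx_within
    adjusted = {name: post_mean - slope * (pre_mean - grand_pre_mean)
                for name, (pre_mean, post_mean) in group_means.items()}
    return slope, adjusted

# Hypothetical (pretest, posttest) pairs; the control group started out ahead.
scores = {
    "treatment": [(40, 60), (50, 70), (60, 80)],
    "control": [(50, 55), (60, 65), (70, 75)],
}
slope, adjusted = ancova_adjusted_means(scores)
print(slope, adjusted)
```

In this invented example the raw post-test means differ by 5 points, but once the control group's head start on the pretest is taken into account the adjusted means differ by 15 points, which is the kind of correction the analysis of covariance supplies.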

When the variables investigated are numerous, such as in the 2 (independent variables) x 3 (dependent variables) factorial or Solomon Four Group Experimental Design, an even more complex analysis called Multivariate Analysis of Covariance (MANCOVA) is used for data treatment.

Single Group and Factorial Design: Quasi-Experimental Design

In a large number of real-life research situations, researchers find it difficult, if not impossible, to use a true experimental design in carrying out studies. This may be because the scheduling and implementation of experimental treatment conditions, or the randomization and grouping of subjects, are not possible; in some cases, schools would not allow their programmes to be disrupted or all their pupils to be used as research subjects. Under these circumstances, the researcher may have to fall back on designs which are not truly experimental and which offer less rigorous controls than the true experimental design. Designs of experiments which offer such less rigorous controls are quasi-experimental. To use these designs effectively, the researcher should know their main strengths and take full advantage of them while avoiding their weaknesses and pitfalls as much as he can. In other words, this involves knowing which variables have to be adequately controlled for, reducing the sources of internal and external validity threats, and so on.

One type of quasi-experimental design is the Non-randomized Control-Group Pretest-Posttest Design. The design uses non-randomized groups, and this option arises when the researcher cannot randomly sample and assign his subjects to groups. Thus, he has to use groups already in existence, such as intact classes, trade unions, town unions, distinct co-operative societies, women of common interest and of equal socio-economic status (widows, etc.), members of the same social club, etc. Since the research subjects are not randomly sampled, the selection of subjects increases the researcher’s selection biases as well as sampling error, in terms of whether the selected subjects truly represent the population from which they were drawn and whether the subjects, when grouped, are homogeneous or equivalent. To minimize these problems, subjects need to be selected on criteria which ensure that homogeneity or equivalence of subjects in the different research groups is achieved, or seen to have been achieved, at the beginning of the proposed study. Furthermore, a pretest should be administered at the beginning of the proposed study, and the pretest data can be used for finding out whether the subjects in the different groups are homogeneous (equivalent) or not. If subjects in one group score disproportionately higher on the pretest than subjects in another group, it is possible to establish homogeneity (equivalence) of groups through sorting and matching or rearrangement. For instance, this can be done more easily by the researcher mixing high-ability and low-ability students equally in all the groups, so as to achieve some measure of equivalence or homogeneity of groups before starting the actual research work. At the end of the study, using an analysis of covariance technique, the researcher is also able to compensate for the initial lack of equivalence between groups.
Analysis of Covariance is a statistical technique which statistically equates the groups on their baseline pretest data, establishes the covariation between the pretest and posttest, and ultimately determines whether there is any significant difference between groups based on the gain scores, i.e., the difference between pretest and post-test. Let’s look at a diagrammatic representation of the non-randomised control-group pretest-posttest design.

Sampling    Grouping        Pretesting    Research conditions     Post-testing

- (None)    Expt. Gr 1      O             X (i.e., Treatment)     O

- (None)    Control Gr 2    O             - (i.e., Control)       O
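
The covariance adjustment described for this design can be illustrated with a short sketch. It is not the full ANCOVA significance test; it only computes the classical adjusted difference between group posttest means, using the pooled within-group slope of posttest on pretest. The function name and all the scores below are hypothetical and chosen purely for illustration.

```python
from statistics import mean


def ancova_adjusted_difference(pre_t, post_t, pre_c, post_c):
    """Return (raw difference, pooled within-group slope, adjusted difference).

    The adjusted difference is the posttest difference between groups
    after allowing for the groups' unequal pretest means.
    """
    def within_group_sums(pre, post):
        mx, my = mean(pre), mean(post)
        sxx = sum((x - mx) ** 2 for x in pre)
        sxy = sum((x - mx) * (y - my) for x, y in zip(pre, post))
        return sxx, sxy

    sxx_t, sxy_t = within_group_sums(pre_t, post_t)
    sxx_c, sxy_c = within_group_sums(pre_c, post_c)
    slope = (sxy_t + sxy_c) / (sxx_t + sxx_c)  # pooled slope of post on pre
    raw = mean(post_t) - mean(post_c)
    adjusted = raw - slope * (mean(pre_t) - mean(pre_c))
    return raw, slope, adjusted


# Hypothetical intact classes: the treatment class starts 10 points ahead.
pre_treat, post_treat = [50, 60, 70, 80], [56, 62, 70, 80]
pre_ctrl, post_ctrl = [40, 50, 60, 70], [43, 49, 57, 67]

raw_diff, slope, adjusted_diff = ancova_adjusted_difference(
    pre_treat, post_treat, pre_ctrl, post_ctrl
)
print(f"raw posttest difference: {raw_diff:.1f}")
print(f"pooled within-group slope: {slope:.2f}")
print(f"difference adjusted for pretest: {adjusted_diff:.1f}")
```

In this made-up example, much of the raw posttest advantage of the treatment class disappears once the initial lack of equivalence on the pretest is taken into account, which is the point of using covariance with intact groups.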

Given that it was not possible to randomly compose and group subjects, you may wish to consider, as an alternative, randomly assigning the experimental and control conditions to the two groups. This can be done by flipping a coin to decide which group is to receive the experimental treatment and which group is to be the control group. As much as possible, subjects should not be informed ahead of time about what the research conditions are. Again, they should not be asked to volunteer for any particular group, especially if they are aware of what each group will be doing during the research. When subjects are aware of the research condition they will be exposed to, there is a tendency for them to react to this newness effect or awareness and consequently, knowingly or unknowingly, distort the full effects which the treatment/control conditions (i.e., the research conditions) are intended to have on the dependent variable (the outcome of the experiment). Even when we succeed in not disclosing the research conditions to the subjects, there is yet another problem with this kind of design, i.e., an experimental design in which subjects are selected rather than sampled, and in which there is pre-testing and post-testing. This is the problem of regression.
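
The coin-flip assignment of research conditions to two intact groups, mentioned at the start of the paragraph above, can be sketched as follows. The group names and the function name are hypothetical; the fixed seed is used only so that the example can be repeated.

```python
import random


def assign_conditions(groups, rng):
    """Randomly decide which of two intact groups receives the treatment."""
    if len(groups) != 2:
        raise ValueError("this sketch expects exactly two intact groups")
    treated = rng.choice(groups)  # the software equivalent of a coin flip
    return {g: ("treatment" if g == treated else "control") for g in groups}


rng = random.Random(2024)  # fixed seed: an assumption made for repeatability
assignment = assign_conditions(["Class A", "Class B"], rng)
for group, condition in assignment.items():
    print(group, "->", condition)
```

Whichever class the draw favours, the researcher has not chosen it himself, and that is what removes his own preferences from the assignment of conditions.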

Variable to determine their effects on the dependent variable. Hypotheses are stated within the framework of a defined, acceptable and relevant research problem. An appropriate experimental design is used for collecting data scientifically toward testing the stated hypothesis. Data obtained from an experiment are analysed and the results used to accept or reject the hypothesis. Conclusions drawn from such acceptances or rejections are then generalized to the entire population similar to the one the sample was drawn from. The ultimate goals of an experiment are therefore to predict events; control and expect certain events; build up the body of knowledge and facts within the area experimented upon; and discover new grounds to explore and exploit toward improving our lives on earth. Because the goals of experiments influence our lives very profoundly, a great deal of careful consideration goes into the framework or characteristics upon which the conduct and substance of experiments are anchored. There are three essential characteristics of any experiment. These are the control, manipulation and observation characteristics, the so-called centrepiece of experiments. Read these carefully and understand them. They are important.

The control characteristic of an experiment is concerned with arranging quantifiable and manipulable research conditions in such a way that their effects can be measurably investigated. Without control, it becomes impossible to determine the effect of an independent variable on the dependent variable. Control in an experiment rests on two assumptions. The first is that, given two or more situations that are equal in every respect except for a factor that is manipulated in, added to or deleted from one of them, any difference appearing (as measured through testing) between the situations is attributable to the factor that was manipulated, added or deleted. This assumption is called the Law of the Single Variable, developed by Mill (1873:20). Indeed, Mill noted, a long time ago, that:

If an instance in which the phenomenon under investigation occurs, and an instance in which it does not occur, have every circumstance in common save one, that one occurring only in the former, the circumstance in which alone the instances differ is the effect, or the cause, or an indispensable part of the cause of the phenomenon.

The second assumption is that if two or more situations are not equal, but it can be demonstrated that none of the variables is significant in producing the phenomenon under investigation, or if significant variables are made equal, any difference occurring between the two situations after the introduction of a new variable to one of the systems can be attributed to the new variable.

This second assumption is referred to as the Law of the Only Significant Variable. Of the two assumptions above, the second is the more important in education and social science because it is very unlikely that the outcome of a study (the dependent variable), or what we observe after manipulating the independent variable, is the result of only one variable acting alone, without any other variable affecting or influencing the outcome we observed. Usually, variables act in combination, rarely singly, to produce an observed outcome. For instance, why is a political party more successful than others? What variables operated to ensure that a particular student scored highest in a particular mathematics achievement test administered to his class? Education and many social events deal with human beings who are constantly affected by many variables, and what we observe about them is therefore the consequence of many variables, not one variable. The outcomes of laboratory experiments involving chemicals, temperature changes, etc. can be explained by the law of the single variable, but this is not so in education and social science. Fortunately, in education, we can substantially minimize the effects of other variables so as to manipulate one variable under rigorously controlled conditions, and then go on to determine its effects on the dependent variable. Within the assumption of the law of the only significant variable, other variables operate along with the manipulated one, but these variables are controlled out or operate at a minimum, thus leaving the significant variable to dominate and exert its effects on the dependent variable. If a variable is known or suspected to be irrelevant and unlikely to operate in conjunction with a likely significant variable, such an irrelevant variable is ignored.
Insignificant variables in academic achievement-related and social science studies include height; hair colour; weight; religion; tribe; shoe size; size of head, toe, hands, etc.; dress preferences; musical preferences; and so on. These should be left uncontrolled or simply ignored in experiments in which, for instance, teachers’ personality and the effectiveness of teaching methods, or the comparative effectiveness of two or more curricula or social programmes, are to be investigated. On the other hand, significant variables, which can influence experiments and need to be controlled for when one is carrying out experiments on subjects’ social traits, include their interests, study habits, socio-economic attainment, motivation, political affiliations and reading ability. General intelligence, socio-economic status of parents and other variables like these are also significant variables. To reduce the effects of these undesired but significant variables, which may not be the main thrust of a study but which can affect its outcome, the researcher must establish controls over them so that their effects are minimized. The effects of these undesired but significant variables can be removed by ensuring that subjects in the research groups are equally matched on each of them before the experiment on the groups commences. Otherwise, if, for instance, subjects in group 1 are better readers, have more interest and have better motivation than group 2 subjects, any difference in achievement between the two comparative groups can be attributed not only to the one independent variable manipulated in the experiment (such as teaching method, teacher personality/effectiveness, etc.) but also to the other undesired but significant variables of reading ability, level of interest and level of motivation, respectively.
As far as the three examples above are concerned, control therefore refers to the researcher’s actions designed to eliminate the influence of undesired but significant variables, as well as the differential effects of such variables upon the different groups of subjects participating in an experimental study in education and the social science disciplines. When such controls have been achieved, the confounding, enhancing or mitigating effects of the undesired but significant variables are reduced or removed, such that only one variable, the significant independent variable, is then deemed to have caused the observed outcome (dependent variable) of the experiment. There are five ways of controlling for the undesired but significant (pre-existing intervening) variables which can enhance, confound, mitigate or mix up an observed outcome or effect of an experimental study; they are considered pre-existing because, in a sense, they existed in the subjects prior to the commencement of the experiment. The five ways are: (1) randomization of subjects, i.e., random assignment of subjects to their respective groups using a sample-and-assign method rather than sampling first and only then assigning subjects to their respective groups; (2) random assignment of the treatment or control research conditions to the research groups; (3) use of covariance statistics if random sampling of the research groups cannot be achieved; (4) use of covariance statistics if the research design involved pre-testing or if subjects were selected and then grouped for the experimental purposes; and (5) matching subjects, ensuring that they are all equally matched on each of the undesired but significant variables, and then assigning them to their respective research groups.
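
The last of the five ways, matching, can be sketched in a few lines. The sketch assumes a single matching variable (a hypothetical reading-ability score), pairs subjects with adjacent scores and then splits each pair at random between the two groups. The subject labels, scores and function name are all invented for illustration.

```python
import random
from statistics import mean


def matched_groups(scores, rng):
    """Pair subjects with adjacent scores, then split each pair at random."""
    ranked = sorted(scores.items(), key=lambda item: item[1])
    group_1, group_2 = [], []
    # Walk through the ranking two subjects at a time; with an odd number
    # of subjects the last one is left unmatched and is not assigned.
    for i in range(0, len(ranked) - 1, 2):
        pair = [ranked[i], ranked[i + 1]]
        rng.shuffle(pair)  # chance, not the researcher, decides who goes where
        group_1.append(pair[0])
        group_2.append(pair[1])
    return group_1, group_2


# Hypothetical reading-ability scores for eight subjects.
subjects = {"S1": 35, "S2": 38, "S3": 47, "S4": 50,
            "S5": 58, "S6": 61, "S7": 72, "S8": 75}

group_1, group_2 = matched_groups(subjects, random.Random(7))
mean_1 = mean(score for _, score in group_1)
mean_2 = mean(score for _, score in group_2)
print("group 1:", group_1, "mean =", mean_1)
print("group 2:", group_2, "mean =", mean_2)
```

Because each pair differs by only a few points, the two group means on the matching variable cannot differ by more than the largest within-pair gap, whichever way the random split falls.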

The manipulation characteristic of an experiment is concerned with the researcher’s actual, deliberate, total and systematic compliance with all facets of the predetermined or planned events, conditions, procedures and actions which are imposed on the treatment group subjects as the experimental treatment; only the treatment is manipulated, while the control research condition or placebo is not manipulated. It is expected that in an experiment the researcher must comply totally, rather than haphazardly, with all aspects of the research conditions, both those of the experimental treatment (which is manipulated) and those of the control (events, conditions, etc. which are not manipulated). Technically, the experimental treatment condition is the hallmark or substance of the independent variable, and it is the major condition that is manipulated in order to investigate its effects on the dependent variable. This holds even when two or three conditions, events or actions constitute the independent variable in an experimental study. For example, in a study on the Effects of Discovery versus Lectures on Students’ Recall Abilities in Algebraic Tasks, discovery and lectures are the two research conditions that constitute the independent variable. The researcher may decide that the discovery teaching method is the treatment condition. So, it is introduced and manipulated. Both conditions are actively monitored and followed through for their effects on the dependent variable; in this example, the discovery method of teaching is the experimental treatment condition, event or action, and it is manipulated by the researcher complying with the five known characteristics of the discovery teaching method, so as to determine its effects on students’ ability to recall the algebra they were taught. The control research condition of the experiment, the lecture teaching method, is not manipulated.
Nonetheless, if an experiment involves two treatment conditions simultaneously (for example, the effects of warm and cold water with high-quality and low-quality detergent on washing dirty clothes), both the warm-water and cold-water conditions are manipulated simultaneously, using low- and high-quality detergent respectively in washing dirty clothes, to find out which combination cleans the clothes better. Water temperature (warm versus cold) and detergent quality (high versus low), used in both types of water, are the independent variables. How clean the clothes washed under these water and detergent conditions are is the dependent variable. The separate and joint effects of these variables on the cleanliness of washed clothes can be determined quantitatively using Multivariate Analysis of Variance (assuming that water temperatures are assigned quantitative values, that the water is used to wash clothes of similar levels of dirtiness, and that after washing the cleanliness of the clothes is assigned quantitative values; these quantities are then statistically compared).
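
Before any significance test is run on a two-factor experiment such as the washing example, the pattern in the data can be described from the four cell means. The sketch below does only that descriptive step. The cleanliness ratings are hypothetical, and the variable names are assumptions introduced for illustration.

```python
from statistics import mean

# Hypothetical cleanliness ratings (higher = cleaner), three washes per cell.
scores = {
    ("warm", "high"): [8, 9, 10],
    ("warm", "low"): [5, 6, 7],
    ("cold", "high"): [6, 7, 8],
    ("cold", "low"): [3, 4, 5],
}

# Mean cleanliness in each of the four water-by-detergent conditions.
cell = {condition: mean(values) for condition, values in scores.items()}

# Main effect of water temperature: warm average minus cold average.
temperature_effect = (
    mean([cell["warm", "high"], cell["warm", "low"]])
    - mean([cell["cold", "high"], cell["cold", "low"]])
)

# Main effect of detergent quality: high average minus low average.
detergent_effect = (
    mean([cell["warm", "high"], cell["cold", "high"]])
    - mean([cell["warm", "low"], cell["cold", "low"]])
)

# Interaction: does the detergent advantage differ between warm and cold water?
interaction = (
    (cell["warm", "high"] - cell["warm", "low"])
    - (cell["cold", "high"] - cell["cold", "low"])
)

print("cell means:", cell)
print("temperature effect:", temperature_effect)
print("detergent effect:", detergent_effect)
print("interaction:", interaction)
```

With these invented ratings, both factors make a difference on their own, while an interaction of zero indicates that the detergent advantage is the same in warm and in cold water. Whether such differences are statistically significant is a separate question for the analysis of variance.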

Finally, the observation characteristic of an experimental design study partly concerns the researcher’s carefulness in determining exactly those attributes or outcomes in a study which have to be measured and recorded. Ideally, the attributes or outcomes to be measured should be quantitative dependent variables. Observation, in its most direct operation in the school setting, involves testing and accurately recording students’ achievements. This requires that the researcher develops and uses tests that are fair to the testees and valid and reliable for measuring the subject-matter or constructs the tests were supposed to measure. It also requires that we grade and score achievements in a fair and accurate manner, using a valid and reliable marking scheme. It is only when we do these that achievement, as an index of observation of learning in schools, can lend itself to a high level of predictability of learning as well as explanations of how learning occurs. When this is done, the quantitative data of experiments will enable us to have a better understanding of the independent variables that cause learning to occur, how successful social and economic programmes are, and so on. Obviously, we cannot, as you probably know, measure learning per se, but we can attach a fixed quantity at a given time and place and on a given school subject (achievement) and refer to this quantity as learning. Therefore, the more careful, thorough and rigorous our methods of quantitatively measuring achievement in an experiment are, the more accurate we will be in measuring learning, predicting learning and understanding how students learn within school settings. This is also true of investigations of socio-economic programmes. The sketch below illustrates the framework of the three characteristics of an experiment, i.e., the three major demands of experiments which we discussed above: control, manipulation and observation.

Characteristics of an Experiment

1: Control component (Law of the Single Variable: applies in laboratory experiments)

2: Manipulation component: experimental treatment only is manipulated

3: Observation component: careful, thorough and rigorous methods of measurement

3.3 Threats to Experimental Design Studies

In order for an experimental research study to achieve its paramount goals of enabling the researcher to make accurate and valid predictions and explanations of events or dependent variables with regard to their causality and so on, the activities which constitute the research itself must possess a high degree of validity and reliability. It may not have reliability and validity if the experiment is subjected to threats. There are two classes of such validity threats: internal validity threats and external validity threats.

Internal validity threats to experimental studies are those factors or activities which mitigate, confound and influence the manipulated independent variable of an experiment to the extent that its effects on the dependent variable are altered (enhanced, removed or minimized). Therefore, an experimental study has high internal validity if threats which may mar the effects of the independent variable on the dependent variable are removed or severely minimized. When internal validity threats are not removed or severely minimized, it would be possible but clearly wrong for the researcher to assert that it was the experimental treatment that brought about the observed outcome, i.e., the change in the dependent variable. An assertion which is accurate, verifiable and sustainable in this regard can only be made if adequate and necessary controls, manipulation and observations have been carefully thought through and systematically carried out. If the three major characteristics of experimental research (control, manipulation and observation), which were discussed in the preceding section, are accounted for, then the internal validity threats or extraneous variables which mitigate, confound and influence the effects which the independent variable has on the dependent variable are removed. Generally, eight internal validity threats or extraneous variables have been identified as posing serious alteration or confounding threats to experimental research in education and social science. We will discuss the internal validity threats first.

Pretesting: Pretesting, which is the administering of a research test to subjects before the actual commencement of a study, sensitizes them to become aware or suspicious of the purposes of the pre-testing aspect of the experiment. In educational settings, most students prepare for their examinations from previous years’ examination/question papers. So, having been administered a pretest, most students resort to preparing for the posttest by revising the questions of the pretest. Ali (2004) has reported that at all levels of education, evidence shows that pretest questions are carefully, repetitively and methodically studied by students prior to the posttest, almost to the extent that any observed improvement in posttest performance by the student subjects may well not be because of the effects of the experimental treatment but partly due to their previous level of preparation. Designs of experiments which have pretests suffer from this internal validity threat. Another source of threat has to do with the newness effect of pre-testing on the subjects. Some subjects may read meanings into the newly introduced pretest, which is not normally done in the class or in the community, and so become sensitized to the test and react more to it than to the experiment. This phenomenon is commonly referred to as the reactive arrangement or reactive effect of pre-testing on the subjects. Some researchers have suggested that the reactive effect of pre-testing can be minimized through scrambling of the posttest items administered to subjects at the end of the experiment. Scrambling can be achieved through renumbering of the posttest items, using coloured paper different from that of the pretest, and retrieving all the pretest question papers from the students after the pretest examination, among others.

History: Certain historical and unique environmental events, which are beyond the control of the experimental research but which may have had profound effects on the subjects, can confound the relationship between the independent and dependent variables of the study. Historical events such as human and natural disasters, tsunamis, strikes, famine, calamities, economic hardship, sudden changes in the school year or curricula, undue anxiety, wars and sustained disruption to academic activities can, either singly or in combination as the case may be, enhance, disturb or stimulate subjects’ performance on the dependent variable. A longer experimental research study stands a higher chance of being affected by historical events. Therefore, an experimental study should not be unduly long. One way of avoiding this is to carry out the experiment in phases, completing and reporting each phase before embarking on another.

Maturation: Subjects, and indeed all human beings, change with time regardless of what treatment condition they are exposed to. Between the initial test and the subsequent test, the subjects may have undergone many kinds of maturational changes, since they are influenced by several factors, not just the experimental treatment. Changes include becoming less or more bored, becoming more or less wise, becoming more or less fatigued, and becoming more or less motivated, as the case may be. Each or all of these changes may produce an observed outcome on the dependent variable which is then falsely attributed to the experimental treatment rather than to the maturational changes indicated above.

Instability of Instrument: If, in an experimental design study, the instrument for data collection is not valid, reliable and appropriate, or if the techniques of using the instrument and of observing and recording the data are not consistent and systematic, the data obtained from such an instrument or techniques are unstable. An instrument which is faulty, or even one that is precise and valid but wrongly used, will yield unstable data. Similarly, haphazard techniques in data collection yield unstable data, or data that continue to change with each administration of the instrument. Researchers should guard against any sources of error, such as instrument decay (faults, imprecision from repeated use or overuse, etc.), which pose an internal validity threat to their work. For instance, if research assistants are used for recording observed data, care must be taken to ensure that they know what to observe, when to observe, what to record, how to record, and when to stop recording because of fatigue, boredom or lack of focus on what to record. Otherwise, serious errors are introduced into the experimental data during the use of the instrument, and these become serious internal validity threats. Under no circumstance should the same assistant be used for recording observation data for both the experimental and control groups. Why did we make this suggestion?

Experimental Mortality: Subjects in an experimental research study may reduce in number between the time the experiment commenced and when it ended. Losses in data can arise from illness, parental requests for wards to discontinue participation, movement of some subjects to another school, unwillingness of subjects to continue with the research, and incomplete data sets. Imagine that in a study almost all the losses through mortality were subjects in the experimental treatment group who had scored low in the pretest. Because the remaining subjects did well in the pretest, they would most naturally do well in the posttest, not so much because of the effects of treatment as because those students who scored low in the pretest did not take the posttest. Mortality is a problem in experiments which span long periods.
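
The scenario imagined above can be made concrete with a deliberately simple sketch. It assumes a treatment that has no effect at all, so every subject scores the same on the posttest as on the pretest, and it assumes that the three lowest pretest scorers drop out. All labels and scores are hypothetical.

```python
from statistics import mean

# Hypothetical pretest scores for six subjects in the treatment group.
pretest = {"S1": 30, "S2": 35, "S3": 40, "S4": 60, "S5": 65, "S6": 70}

# Assumption for illustration: the treatment changes nothing,
# so each subject's posttest score equals the pretest score.
posttest = dict(pretest)

# The three lowest pretest scorers are lost before the posttest.
dropouts = {"S1", "S2", "S3"}
completers = [s for s in pretest if s not in dropouts]

# Naive comparison: completers' posttest mean against everyone's pretest mean.
naive_gain = mean(posttest[s] for s in completers) - mean(pretest.values())

# Fair comparison: gain computed only for subjects with both scores.
fair_gain = mean(posttest[s] - pretest[s] for s in completers)

print("apparent gain when dropouts are ignored:", naive_gain)
print("gain among subjects who completed both tests:", fair_gain)
```

The apparent gain in the naive comparison comes entirely from who left the study, not from anything the treatment did.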

Statistical Regression: If subjects are grouped on the basis of their pretest scores, then in addition to the interactive effect between pretest and posttest, there is also the problem of statistical regression. Statistical regression is a phenomenon in a pretest-posttest experiment in which extreme scores affect the gain scores of the subjects (e.g. research evidence shows that subjects who have low pretest scores tend to end up with higher posttest scores), whereby the higher gain scores may be misjudged or misinterpreted as arising from the treatment effect. The truth of any pretest-posttest design is, in part, that subjects in any comparative group who score highest on the pretest are likely to score relatively lower on the posttest, while subjects who score lowest on the pretest are likely to score higher on the posttest. Thus, the researcher should be aware that the subjects who scored lowest or highest in the pretest are not necessarily going to be the lowest or highest scoring subjects on the posttest. Therefore, regression as an internal validity threat occurs inevitably in any pretest-posttest design, essentially because there is usually a regression of the subjects’ scores toward the overall mean of the entire experimental group. Superior gain score differences between treatment and control groups may well not be a direct and entire consequence of the treatment effect on the experimental groups. In fact, gain score differences between groups are always affected by regression in any pretest-posttest design study.
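
Regression toward the mean can be demonstrated with a small simulation in which no treatment is given at all. The sketch assumes that each observed score is a stable ability plus independent measurement error on each testing occasion; the sample size, means, spreads and seed are arbitrary choices made for illustration.

```python
import random
from statistics import mean

rng = random.Random(0)  # fixed seed so the simulation can be repeated
n = 2000

# Assumed model: observed score = stable ability + occasion-specific error.
ability = [rng.gauss(50, 10) for _ in range(n)]
pretest = [a + rng.gauss(0, 10) for a in ability]
posttest = [a + rng.gauss(0, 10) for a in ability]  # no treatment applied

# Select the 200 lowest and the 200 highest scorers on the pretest.
order = sorted(range(n), key=lambda i: pretest[i])
lowest, highest = order[:200], order[-200:]

gain_low = mean(posttest[i] - pretest[i] for i in lowest)
gain_high = mean(posttest[i] - pretest[i] for i in highest)

print(f"mean gain of lowest pretest scorers:  {gain_low:+.1f}")
print(f"mean gain of highest pretest scorers: {gain_high:+.1f}")
```

Under these assumptions the lowest pretest scorers appear to improve and the highest appear to decline, even though nothing was done to either set of subjects. A researcher who had given a treatment only to the low scorers could easily mistake this movement for a treatment effect.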

Selection Biases Arising from Differential Selection of Subjects: Even though a researcher may not be aware of it, when he selects and groups subjects, certain criteria unwittingly influence whom he selects and puts in a particular research group. When this happens, as it is bound to, there is non-equivalent grouping of subjects prior to the commencement of the experiment. The general tendency among unwary researchers is to select and assign better subjects to the experimental group. This advantage enables these better subjects to do better than the control group subjects, who were worse candidates before the commencement of the experiment and who, in any case, would be expected to perform worse at the posttest than their experimental group counterparts. Under this condition, the researcher’s selection biases threaten the internal validity of his results, since the results may well have been caused not by the treatment but more by the fact that, ab initio, the experimental subjects were favoured and consequently, as would be expected, did better than the control group subjects in the posttest.

Influence of Earlier Treatment Experiences: Many researchers use subjects whose earlier history of exposure to other research conditions they do not know of or care to find out. Such earlier research treatment influences may well affect experimental research findings negatively, positively, or selectively for members of a particular comparative research group. For instance, a researcher may unknowingly place in experimental group 1 more subjects who had just finished an earlier experiment on Communicative English Language Reading and who therefore have more reading skills than the control group subjects, most of whom did not participate in that earlier reading experiment. Because some subjects have had this earlier exposure to reading skills treatment and their counterparts have had none, there is ab initio an unfair advantage conferred on the experimental group subjects and an unfair disadvantage on the control group. So, in any later experimental work involving reading, such as word problems in mathematics, English language and so on, an undeserved advantage is conferred on the former and an undue disadvantage on the latter. To avoid this problem, researchers should find out about the earlier experimental experiences of their proposed subjects so as to ensure that these experiences are fairly or evenly distributed in the population they want to work with; they can then randomly sample from that population.

External Validity Threats

External validity threats are those factors or events which affect an experiment and which reduce the usefulness, relevance and practical applicability of its results so much that the results and conclusions of the experiment cannot be generalized to the real world; what use is an experiment to man if its findings have no practical value? Therefore, before embarking on a study, the researcher must ensure that the ultimate results of his work will be useful, relevant and of practical application to social science and educational settings, by asking himself such questions as: To what real populations, school settings, administrative or social group settings, political settings, experimental variables, measurement variables and research analytical variables can the research findings and conclusions of my proposed study be generalized? If the answer to each of these questions is none, then the researcher should not embark on his proposed experiment. Even when his findings and conclusions are generalizable to the population, there are factors which threaten the substance of such generalizations. He must take care of the factors which threaten the study’s external validity (the extent to which one’s research findings can be generalized to the overall population). These threats are discussed below:

Hawthorne Effect: The situations under which experiments in education and social science proceed need to be controlled so that the experiments can go on as naturally as possible, rather than under contrived conditions or under the influence of subjects’ responses to novel conditions induced by the experiment. When experimental conditions are not adequately controlled, subjects’ reactions and responses may become distorted by the mere fact of the introduction of the research conditions. On becoming aware of the new situation created by the introduction of an experiment in their class, village school, football team and so on, subjects may become resentful, feel preferred, or feel rejected or inferior to the other research group or even to the population that was not used; some subjects may ask, why us, not them? Any of these reactions and responses may leave some effect on the subjects, and that effect depends on how the subjects were affected by the newly introduced research-induced situation. Subjects’ knowledge that they are participating in an experiment as the treatment group may lead them to give contrived or biased responses that arise from the new situation itself rather than from the experimental treatment. When subjects respond to the newness of the experimental treatment rather than to the treatment itself, this is referred to as the Hawthorne effect, and it is a serious external validity threat to an experiment. Similarly, when control group subjects respond to their knowledge that nothing is being done to them (they are the control) while something is being done to their treatment classmates, they may become nonchalant about the research study or uncooperative with the researcher and his work.
Such a nonchalant response arises not from the control condition itself but from the knowledge that nothing was done to them or was happening to them. This response is the placebo effect on the control group subjects. The Hawthorne effect was first described following experiments done at the Hawthorne Plant of the Western Electric Company in Chicago, as reported by Roethlisberger and Dickson (1940). In this study, the lighting in three departments in which workers inspected small parts, assembled electrical relays and wound coils was gradually increased. It was found that production in all three departments increased as the light intensity increased. After a certain high level of production was reached, the researchers progressively reduced the intensity of light in the departments to determine the effect this would have on productivity. To their surprise, they found that productivity continued to increase. The researchers then concluded that the newness effect of the change in lighting and the employees’ mere awareness of participating in the study, rather than the experimental treatment of increased lighting, led to the production gain: the now so-called Hawthorne effect. Further experimental studies of this nature done at the plant, varying the rest periods and the length of working days and weeks respectively, produced the same Hawthorne effect. The reactive effect of subjects to the newness of an experiment has also been observed in medical research. Medical research subjects generally react to whatever drug they receive as treatment, regardless of whether it is the real drug being tested (which contains the pharmaceutical preparation) or a placebo (an inert, harmless, blank drug that looks like the one containing the pharmaceutical preparation being tested).
By masking the real (experimental) drug from the inert (placebo) ones, researchers are able to reduce subjects’ reactive effects to the experimental treatment, since the subjects do not know which drug is the potent one and which is the placebo, a worthless mimic that merely looks like the potent drug. Again, if the knowledge of who is in the placebo or the experimental condition is concealed from the subjects, then at the end of the experiment, based on the observations made on both groups of patients, it is possible to determine how effective the experimental drug is compared to the placebo (when this knowledge is also withheld from the experimenter who makes the observations, the arrangement is referred to as double blind). By doing this, the problem of some patients reacting to the newness of the study rather than clinically to the potency of the drug received (most people tend to feel better, or say they feel better, after receiving drug treatment, regardless of the efficacy of the drug used) is minimized. But in education and social science research we do not have the luxury of a placebo, i.e., of administering nothing to student subjects in the control group or, even worse, of administering fake control conditions to them. It is nevertheless possible to minimize the Hawthorne effect and other situations which contribute to external validity threats. Clearly, a phased-in, fairly long study of, say, five to twelve months would reduce the newness effect by letting subjects’ reactive effects to treatment wear off, thus eliminating the Hawthorne effect. But it is unwise to do so, because longer studies lead to mortality, maturation and history problems, which then become internal validity threats.
A more useful suggestion for minimizing the Hawthorne effect and other situational external validity threats is to hold all the situations affecting the experimental and control groups constant; to randomly draw and assign treatment and control conditions to groups; and to arrange the study so that, as far as possible, subjects do not know that any research work involving the independent variable is in progress. There are several ways of holding experimental research conditions constant for all the subjects in an experiment. These include treating them alike in all things, and letting them know that this is so, except with regard to the treatment aspect of the independent variable. For instance, in a study of the effectiveness of a teaching method, the duration of teaching; actual teaching time; teacher qualification and personality; topics covered and their scope; tests; apparatus used; language of instruction; learning environment conditions, etc. must be identical for the experimental and the control group. Again, if assistants are used in the research, they must be trained on what to do and on how to do it effectively and with little distraction. They can be brought into the class or community where they will assist well in advance of the commencement of the experiment, so as to minimize the newness effect of their presence during the actual experiment, since the subjects would by then have become used to them.
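The random assignment of treatment and control conditions to groups mentioned above can be illustrated with a minimal sketch. It assumes intact groups (here, four hypothetical classes) and a simple half-and-half split; the class names and the function name assign_conditions are invented for the example.

```python
import random

def assign_conditions(group_names, seed=0):
    """Randomly give half of the groups the treatment condition
    and the remaining groups the control condition."""
    rng = random.Random(seed)  # fixed seed only so the sketch is repeatable
    shuffled = list(group_names)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    # The first half of the shuffled list is treated; the rest are controls.
    return {
        name: ("treatment" if position < half else "control")
        for position, name in enumerate(shuffled)
    }

# Hypothetical intact groups taking part in the study.
classes = ["Class A", "Class B", "Class C", "Class D"]
conditions = assign_conditions(classes)
for name in classes:
    print(name, "->", conditions[name])
```

Because chance alone decides which class receives which condition, the researcher's own preferences cannot systematically favour one group.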

Population Validity: In order to be able to make a valid assertion about the population based on one’s experimental results, the sample used in a study must be typical of the population from which it was drawn. Sometimes the population experimentally accessible to the researcher (the accessible population) may not truly represent the typical population; for instance, primary school children from rich and affluent homes on Victoria Island, Lagos, do not typically represent the primary school population in Nigeria, but they may be the only group readily accessible to the experimenter. Any generalization to the Nigerian primary school population based on samples drawn from such an experimentally accessible population creates an external validity threat. On the other hand, the use of a target population would permit valid generalization about that population based on the samples drawn. The target population is the typical population to which the researcher wants to generalize his conclusions and from which, consequently, he draws his sample. A sample for a target primary school population would include pupils from a variety of socio-economic conditions; schools and pupils from all the different parts of the country; a variety of school types and so on. Usually, it is difficult to obtain a sample which reflects the target population. This difficulty can be overcome by identifying the population and its major attributes and using those attributes as sampling frames, zones and/or clusters, from each of which a sample representative of the population is drawn. For instance, if there are three categories of primary schools in Nigeria, say, well established, less well established and poorly established, the schools in each category are listed and samples representing the three categories are respectively drawn.
If location is an important variable or attribute, then Nigeria may first be divided into, say, five equal locations, clusters or zones; the primary schools belonging to the three categories mentioned earlier are then identified within each of these sampling frames, geopolitical zones or clusters, and randomly sampled from each.
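The zoning and category-by-category sampling just described amounts to stratified random sampling, and a minimal sketch may make the procedure concrete. The five zones, the ten schools per stratum, the sample of two schools per stratum and the function name stratified_sample are all assumptions made for illustration; they are not real figures.

```python
import random
from collections import defaultdict

def stratified_sample(schools, per_stratum, seed=0):
    """Draw the same number of schools at random from every
    (zone, category) stratum that appears in the list."""
    rng = random.Random(seed)  # fixed seed only so the sketch is repeatable
    strata = defaultdict(list)
    for school in schools:
        strata[(school["zone"], school["category"])].append(school)
    sample = []
    for key in sorted(strata):
        members = strata[key]
        # Never ask for more schools than the stratum contains.
        k = min(per_stratum, len(members))
        sample.extend(rng.sample(members, k))
    return sample

# Hypothetical sampling frame: 5 zones x 3 categories x 10 schools each.
zones = ["Zone 1", "Zone 2", "Zone 3", "Zone 4", "Zone 5"]
categories = ["well established", "less well established", "poorly established"]
schools = [
    {"name": f"{zone} / {category} / school {n}", "zone": zone, "category": category}
    for zone in zones
    for category in categories
    for n in range(10)
]

sample = stratified_sample(schools, per_stratum=2)
print(len(sample))  # 30 (15 strata x 2 schools each)
```

Every zone and every category of school is represented in the resulting sample, which is the property that permits generalization to the target population.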

However, there is a problem with the suggestion made above: that of logistical convenience. Clearly, zoning a very large country, identifying its population criteria and sampling from the identified criteria is a difficult, time-consuming task, and the implications of this difficulty are enormous in terms of time, cost, ability to manage the conduct of the study and so on. Despite these difficulties, if a study is going to be generalized to the target population, it is better to have reliable knowledge about a more restricted portion of this target, even on a zone-by-zone basis (although even within the zones some areas may not be included in the sample), than to have a far more restricted, unrepresentative sample (pupils of primary schools on Victoria Island, Lagos). Certainly, it is wrong and misleading to use conclusions generated from studying an unrepresentative sample; samples drawn from an experimentally accessible population cannot yield data that can be reliably used to make generalizations about the target population.

Experimental Environment Conditions: The conditions under which experimental research takes place are as important as the experiment itself. Extreme variations in the environments of different schools, homes, communities, cities and tribes, and in programme administration, may singly or jointly influence the outcomes of experiments. Similarly, outcomes of experiments influence school or community environments. However, what is important to the researcher before proceeding with his research, as far as the experimental environment is concerned, is to make sure that the environment implicit in his study is one existing or attainable in typical schools, communities, homes, etc. in the area where he is doing the study. An experimental environment in which calculators, photomicrographs, computer-simulated teaching episodes or, in a village, unfamiliar external research officers are used is not a typical environment, except in rich, well-established suburban primary schools (and such environments ignore the rural, deprived schools, or involve situations rural folks may not be able to handle).

Finally, all the types of threats discussed in the foregoing section highlight the enormity of the demands, involvement and expectations of experimental work in education and social science research. Knowing what these threats are is important. But far more important are the ways and means through which the researcher can control and minimize, if not eliminate, their effects on the experiment carried out. These specific ways and means have been described in this section. Having indicated the experimental design for the study you want to undertake, you must understand the implications or demands implicit in the chosen design. You should also anticipate what the threats to your experiment are likely to be, as well as how these potential threats will be minimized, if not removed.

3.4 Types of Descriptive Research Design

Having discussed the different types of experimental design, their characteristics and the threats to their validity, it is only fair that we give equal emphasis to the types of descriptive research design in this book. It is fair that we do so because a large number of studies in education and social science use descriptive designs. The need to understand them and to improve on them is therefore important if their knowledge value and their contributions to education and social science are to be sustained and enhanced. It is the firm belief of this author that, for the entry-level researcher, a comprehensive discussion of the types of descriptive research design, with regard to their nature and scope, will help in the envisaged enhancement. Consequently, we will for now discuss survey, case study, evaluation and causal-comparative designs, even though there are other types of descriptive research design, such as Gallup polls, correlational studies, ex post facto studies, market research, impact studies, evaluation studies, longitudinal studies, and so on. We will discuss these other designs separately but more briefly.